Data is a Team Sport: One on One with Daniela Lepiz

Dirk Slater - July 3, 2017 in Community, Data Blog, Event report

Data is a Team Sport is a series of online conversations, held with data literacy practitioners in mid-2017, that explores the ever-evolving data literacy ecosystem.

To subscribe to the podcast series, copy and paste the following link into your podcast manager: http://feeds.soundcloud.com/users/soundcloud:users:311573348/sounds.rss or find us in the iTunes Store and on Stitcher.

This episode features a one-on-one conversation with Daniela Lepiz, a Costa Rican data journalist and trainer who is currently the Investigation Editor for CENOZO, a West African investigative journalism project that aims to promote and support cross-border data investigation and open data in the region. She has a master's degree in data journalism from Rey Juan Carlos University in Madrid, Spain. She was previously involved with OpenUP South Africa, working with journalists to produce data-driven stories. Daniela is also a trainer for the Tanzania Media Foundation and has been involved in many other projects with South African media, La Nacion in Costa Rica and other international organisations.

Notes from the conversation

Daniela spoke to us from Burkina Faso and reflected on the importance of data-driven journalism in holding power to account. Her project aims to train and support journalists working across borders in West Africa to use data to expose corruption and human rights violations. To identify journalists to take part in the project, they look for individuals who are experienced, passionate and curious. The project also engages media houses, such as Premium Times in Nigeria, to ensure that there are respected outlets to publish their stories. Daniela raised the following points:

- As the media landscape continues to evolve, data literacy is increasingly becoming a required competency.

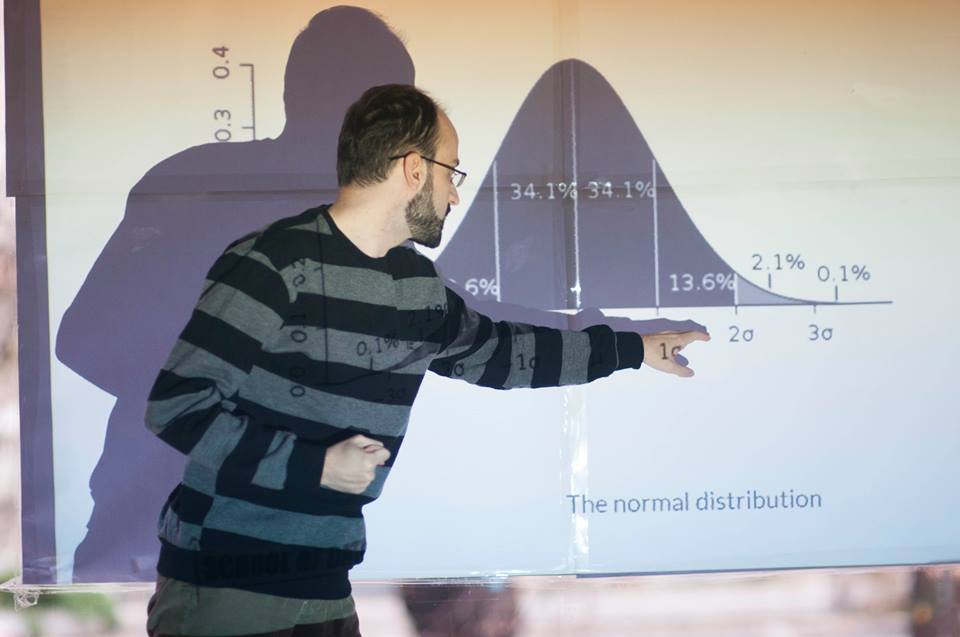

- Journalists do not necessarily have a background in mathematics or statistics and are often intimidated by the idea of having to use these concepts in their stories.

- Data stories are best done in teams of people with complementary skills. This can go against a traditional approach to journalism in which journalists work alone and tightly guard their sources.

- It is important that data training programmes also work with journalists and better understand their needs.

Resources she finds inspiring

- The Quartz Guide to Bad Data

- Coursera’s Launch Your Career in Data Science

- Code4SA: QUICK AND DIRTY MAP VIZ

Her blog posts

- Code4SA: Too much data? Use the command line

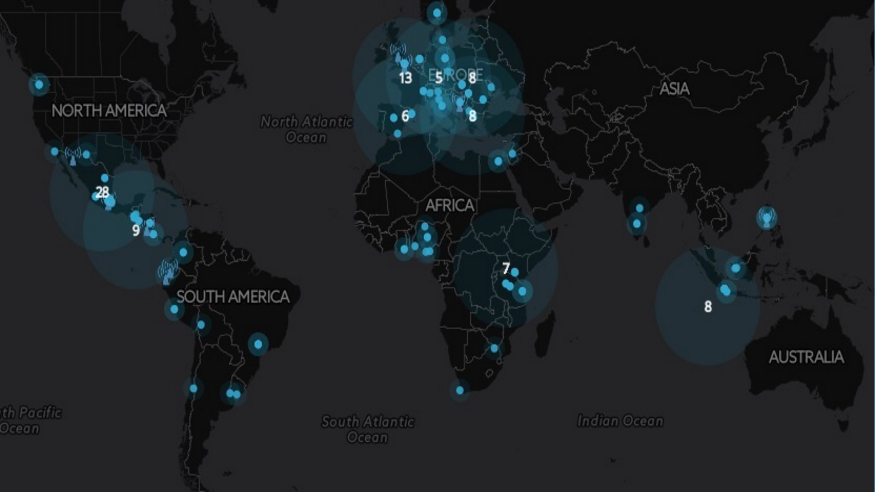

- Code4SA: AFRICA MIGRATION AND BRAIN DRAIN (HOW I NAVIGATED A DATA NIGHTMARE)

- Code4SA: Why should journalists care about url patterns?

The full online conversation:

https://www.youtube.com/watch?v=9l4SI6lm130

Daniela’s bookmarks!

These are the resources she uses the most often.

- Rddj – Resources for doing data journalism with R

- Comparing Columns in Google Refine | OUseful.Info, the blog…

- Journalist datastores: where can you find them? A list. | Simon Rogers

- AidInfoPlus – Mastering Aid Information for Change

Data skills

- Mapping tip: how to convert and filter KML into a list with Open Refine | Online Journalism Blog

- Mapbox + Weather Data

- Encryption, Journalism and Free Expression | The Mozilla Blog

- Data cleaning with Regular Expressions (NICAR) – Google Docs

- NICAR 2016 Links and Tips – Google Docs

- Teaching Data Journalism: A Survey & Model Curricula | Global Investigative Journalism Network

- Data bulletproofing tips for NICAR 2016 – Google Docs

- Using the command line tabula extractor tool · tabulapdf/tabula-extractor Wiki · GitHub

- Talend Downloads

GitHub

- Git Concepts – SmartGit (Latest/Preview) – Confluence

- GitHub For Beginners: Don’t Get Scared, Get Started – ReadWrite

- Kartograph.org

- LittleSis – Profiling the powers that be

Tableau customized polygons

- How can I create a filled map with custom polygons in Tableau given point data? – Stack Overflow

- Using Shape Files for Boundaries in Tableau | The Last Data Bender

- How to make custom Tableau maps

- How to map geographies in Tableau that are not built in to the product (e.g. UK postcodes, sales areas) – Dabbling with Data

- Alteryx Analytics Gallery | Public Gallery

- TableauShapeMaker – Adding custom shapes to Tableau maps | Vishful thinking…

- Creating Tableau Polygons from ArcGIS Shapefiles | Tableau Software

- Creating Polygon-Shaded Maps | Tableau Software

- Tool to Convert ArcGIS Shapefiles into Tableau Polygons | Tableau and Behold!

- Polygon Maps | Tableau Software

- Modeling April 2016

- 5 Tips for Making Your Tableau Public Viz Go Viral | Tableau Public

- Google News Lab

- HTML and CSS

- Open Semantic Search: Your own search engine for documents, images, tables, files, intranet & news

- Spatial Data Download | DIVA-GIS

- Linkurious – Linkurious – Understand the connections in your data

- Apache Solr

- Apache Tika – Apache Tika

- Neo4j Graph Database: Unlock the Value of Data Relationships

- SQL: Table Transformation | Codecademy

- dc.js – Dimensional Charting Javascript Library

- The People and the Technology Behind the Panama Papers | Global Investigative Journalism Network

- How to convert XLS file to CSV in Command Line [Linux]

- Intro to SQL (IRE 2016) · GitHub

- Malik Singleton – SELECT needle FROM haystack;

- Investigative Reporters and Editors | Tipsheets and links

SQL_PYTHON

More data

- 2016-NICAR-Adv-SQL/SQL_queries.md at master · taggartk/2016-NICAR-Adv-SQL · GitHub

- advanced-sql-nicar15/stats-functions.sql at master · anthonydb/advanced-sql-nicar15 · GitHub

- Malik Singleton – SELECT needle FROM haystack;

- Statistical functions in MySQL • Code is poetry

- Data Analysis Using SQL and Excel – Gordon S. Linoff – Google Books

- Using PROC SQL to Find Uncommon Observations Between 2 Data Sets in SAS | The Chemical Statistician

- mysql – Query to compare two subsets of data from the same table? – Database Administrators Stack Exchange

- sql – How to add “weights” to a MySQL table and select random values according to these? – Stack Overflow

- sql – Fast mysql random weighted choice on big database – Stack Overflow

- php – MySQL: Select Random Entry, but Weight Towards Certain Entries – Stack Overflow

- MySQL Moving average

- Calculating descriptive statistics in MySQL | codediesel

- Problem-Solving using Graph Traversals: Searching, Scoring, Ranking, …

- R, MySQL, LM and quantreg

- 26318_AllText_Print.pdf

- ddi-documentation-english-572 (1).pdf

- Categorical Data — pandas 0.18.1+143.g3b75e03.dirty documentation

- python – Loading STATA file: Categorial values must be unique – Stack Overflow

- Using the CSV module in Python

- 14.1. csv — CSV File Reading and Writing — Python 3.5.2rc1 documentation

- csvsql — csvkit 0.9.1 documentation

- weight samples with python – Google Search

- python – Weighted choice short and simple – Stack Overflow

- 7.1. string — Common string operations — Python v2.6.9 documentation

- Introduction to Data Analysis with Python | Lynda.com

- A Complete Tutorial to Learn Data Science with Python from Scratch

- GitHub – fonnesbeck/statistical-analysis-python-tutorial: Statistical Data Analysis in Python

- Verifying the email – Email Checker

- A little tour of aleph, a data search tool for reporters – pudo.org (Friedrich Lindenberg)

- Welcome – Investigative Dashboard Search

- Investigative Dashboard

- Working with CSVs on the Command Line

- FiveThirtyEight’s data journalism workflow with R | useR! 2016 international R User conference | Channel 9

- Six issue when installing package · Issue #3165 · pypa/pip · GitHub

- python – Installing pip on Mac OS X – Stack Overflow

- Source – Journalism Code, Context & Community – A project by Knight-Mozilla OpenNews

- Introducing Kaggle’s Open Data Platform

- NASA just made all the scientific research it funds available for free – ScienceAlert

- District council code list | Statistics South Africa

- How-to: Index Scanned PDFs at Scale Using Fewer Than 50 Lines of Code – Cloudera Engineering Blog

- GitHub – gavinr/geojson-csv-join: A script to take a GeoJSON file, and JOIN data onto that file from a CSV file.

- 7 command-line tools for data science

- Python Basics: Lists, Dictionaries, & Booleans

- Jupyter Notebook Viewer

PYTHON FOR JOURNALISTS

New folder

- Reshaping and Pivot Tables — pandas 0.18.1 documentation

- Reshaping in Pandas – Pivot, Pivot-Table, Stack and Unstack explained with Pictures – Nikolay Grozev

- Pandas Pivot-Table Example – YouTube

- pandas.pivot_table — pandas 0.18.1 documentation

- Pandas Pivot Table Explained – Practical Business Python

- Pivot Tables In Pandas – Python

- Pandas .groupby(), Lambda Functions, & Pivot Tables

- Counting Values & Basic Plotting in Python

- Creating Pandas DataFrames & Selecting Data

- Filtering Data in Python with Boolean Indexes

- Deriving New Columns & Defining Python Functions

- Python Histograms, Box Plots, & Distributions

- Resources for Further Learning

- Python Methods, Functions, & Libraries

- Python Basics: Lists, Dictionaries, & Booleans

- Real-world Python for data-crunching journalists | TrendCT

- Cookbook — agate 1.4.0 documentation

- 3. Power tools — csvkit 0.9.1 documentation

- Tutorial — csvkit 0.9.1 documentation

- 4. Going elsewhere with your data — csvkit 0.9.1 documentation

- 2. Examining the data — csvkit 0.9.1 documentation

- A Complete Tutorial to Learn Data Science with Python from Scratch

- For Journalism

- ProPublica Summer Data Institute

- Percentage of vote change | CARTO

- Data Science | Coursera

- Data journalism training materials

- Pythex: a Python regular expression editor

- A secure whistleblowing platform for African media | afriLEAKS

- PDFUnlock! – Unlock secured PDF files online for free.

- The digital journalist’s toolbox: mapping | IJNet

- Bulletproof Data Journalism – Course – LEARNO

- Transpose columns across rows (grefine 2.5) ~ RefinePro Knowledge Base for OpenRefine

- Installing NLTK — NLTK 3.0 documentation

- 1. Language Processing and Python

- Visualize any Text as a Network – Textexture

- 10 tools that can help data journalists do better work, be more efficient – Poynter

- Workshop Attendance

- Clustering In Depth · OpenRefine/OpenRefine Wiki · GitHub

- Regression analysis using Python

- DataBasic.io

- R for Every Survey Analysis – YouTube

- Git – Book

- NICAR17 Slides, Links & Tutorials #NICAR17 // Ricochet by Chrys Wu

- Register for Anonymous VPN Services | PIA Services

- The Bureau of Investigative Journalism

- dtSearch – Text Retrieval / Full Text Search Engine

- Investigation, Cybersecurity, Information Governance and eDiscovery Software | Nuix

- How we built the Offshore Leaks Database | International Consortium of Investigative Journalists

- Liz Telecom/Azimmo – Google Search

- First Python Notebook — First Python Notebook 1.0 documentation

- GitHub – JasonKessler/scattertext: Beautiful visualizations of how language differs among document types
